Maximum likelihood estimation

Mar 18, 2025

Announcements

HW 03 due March 20 at 11:59pm

Project exploratory data analysis due March 20 at 11:59pm

- Next milestone: Project presentations in lab March 28

Statistics experience due April 22

Topics

Likelihood

Maximum likelihood estimation (MLE)

MLE for linear regression

Motivation

- We’ve discussed how to find the estimators of the regression coefficients $\boldsymbol{\beta}$ using least-squares estimation.

Today we will introduce another way to find these estimators: maximum likelihood estimation.

We will see that the least-squares estimator is equal to the maximum likelihood estimator when certain assumptions hold.

Maximum likelihood estimation

Example: Basketball shots

Suppose a basketball player shoots the ball such that the probability of making the basket (successfully making the shot) is $p$.

What is the probability distribution for this random phenomenon?

Suppose the probability is

Suppose the probability is

Shooting the ball three times

Suppose the player shoots the ball three times. The shots are all independent, and the player has the same probability $p$ of making each one.

Let

Suppose the probability is

Suppose the probability is

Shooting the ball three times

The player shoots the ball three times with the outcome

New question: What parameter value of $p$ do you think maximizes the probability of observing this outcome?

We will use a likelihood function to answer this question.

Likelihood

A likelihood function is a measure of how likely we are to observe our data under each possible value of the parameter(s).

Note that this is not the same as the probability function.

Probability function: Fixed parameter value(s) + input possible outcomes

- Given

- Given

Likelihood function: Fixed data + input possible parameter values

- Given we’ve observed

- Given we’ve observed
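Here is a minimal sketch of the distinction. It assumes each shot is an independent Bernoulli trial with the same success probability p. The outcome `(1, 0, 1)` (1 = made, 0 = missed) and the values of p are hypothetical and chosen only for illustration. The probability function fixes p and varies the outcome. The likelihood function fixes the observed outcome and varies p.

```python
from itertools import product


def prob_of_outcome(outcome, p):
    """Probability of a sequence of independent shots (1 = made, 0 = missed),
    where each shot is made with the same probability p."""
    result = 1.0
    for shot in outcome:
        result *= p if shot == 1 else (1 - p)
    return result


# Probability function: fix p, vary the possible outcomes of three shots.
p_fixed = 0.5
probs = {o: prob_of_outcome(o, p_fixed) for o in product([0, 1], repeat=3)}
print(sum(probs.values()))  # probabilities over all 8 outcomes sum to 1

# Likelihood function: fix the observed data, vary p.
observed = (1, 0, 1)  # hypothetical outcome: made, missed, made
for p in [0.2, 0.5, 0.8]:
    print(p, prob_of_outcome(observed, p))
```

For a fixed p, the probabilities across all eight possible outcomes sum to 1. The likelihood values across different values of p do not form a probability distribution over p and need not sum to 1.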

Likelihood: Three basketball shots

The likelihood function for the probability of a basket

Thus, if the likelihood for

Likelihood: Three basketball shots

What is the general formula for the likelihood function for a parameter $\theta$ given observed data $y_1, \dots, y_n$?

How does assuming independence simplify things?

How does having identically distributed data simplify things?
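In general, take a parameter $\theta$ and observed data $y_1, \dots, y_n$ with joint density or mass function $f$. The likelihood is that joint distribution viewed as a function of $\theta$. Independence lets it factor into a product, and identical distribution means every factor uses the same $f$:

```latex
\begin{aligned}
L(\theta \mid y_1, \dots, y_n) &= f(y_1, \dots, y_n \mid \theta) \\
&= \prod_{i=1}^{n} f_i(y_i \mid \theta) && \text{(independence)} \\
&= \prod_{i=1}^{n} f(y_i \mid \theta) && \text{(identically distributed)}
\end{aligned}
```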

Likelihood: Three basketball shots

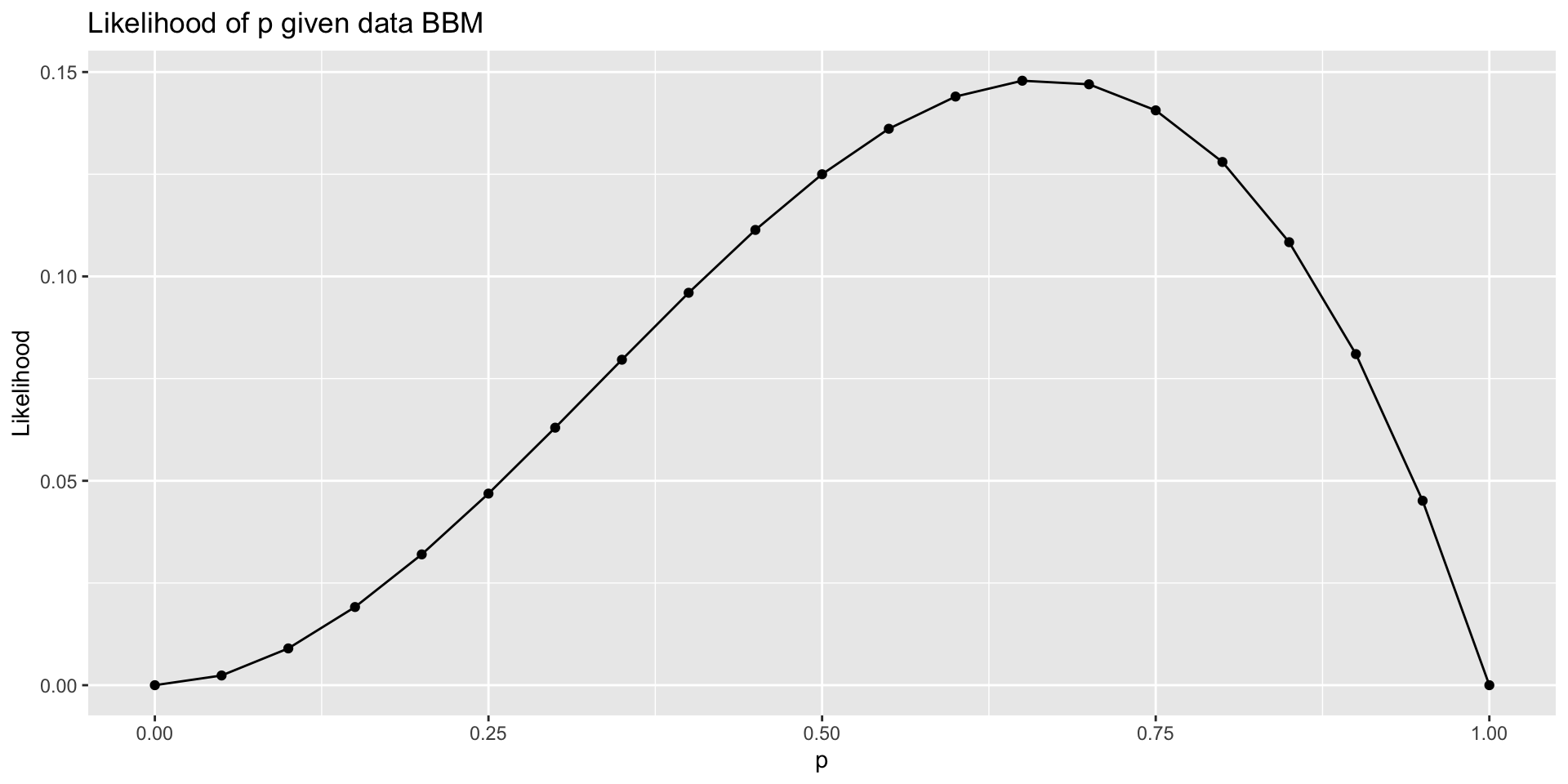

The likelihood function for

We want the value of $p$ that maximizes this likelihood function.

The process of finding this value is maximum likelihood estimation.

There are three primary ways to find the maximum likelihood estimator:

- Approximate using a graph

- Using calculus

- Numerical approximation
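Here is a sketch of the numerical approach. It assumes Bernoulli shots and uses golden-section search, one of many possible optimizers, which requires the function to have a single peak on the interval. The helper names and the data (2 made shots out of 3) are hypothetical. The search maximizes the log-likelihood, which has the same maximizer as the likelihood.

```python
import math


def log_likelihood(p, made, shots):
    """Bernoulli log-likelihood for `made` successes in `shots` independent trials."""
    return made * math.log(p) + (shots - made) * math.log(1 - p)


def golden_section_max(f, lo, hi, tol=1e-8):
    """Maximize a single-peaked function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
    return (a + b) / 2


# Hypothetical data: 2 made shots out of 3.
# Stay slightly inside (0, 1) so log(p) and log(1 - p) are defined.
p_hat = golden_section_max(lambda p: log_likelihood(p, made=2, shots=3), 1e-9, 1 - 1e-9)
print(round(p_hat, 4))
```

For these hypothetical data the search returns a value very close to 2/3. In practice, a library optimizer would typically replace a hand-written search like this one.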

Finding the MLE using graphs

What do you think is the approximate value of the MLE of $p$ based on the graph?

Finding the MLE using calculus

- Find the MLE using the first derivative of the likelihood function.

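- This can be tricky because the likelihood is a product of terms, so differentiating it requires repeated use of the product rule. Instead, we can maximize the log-likelihood, log(Likelihood), which turns the product into a sum. Because the log function is strictly increasing, the same value maximizes both the likelihood and the log-likelihood.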
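For $n$ independent, identically distributed observations with density or mass function $f$ and parameter $\theta$, the identity behind this step is:

```latex
\log L(\theta \mid y_1, \dots, y_n)
  = \log \prod_{i=1}^{n} f(y_i \mid \theta)
  = \sum_{i=1}^{n} \log f(y_i \mid \theta)
```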

Use calculus to find the MLE of $p$.

Shooting the ball

Suppose the player shoots the ball $n$ times.

Suppose the player makes $k$ of the $n$ shots.

- What is the formula for the probability distribution to describe this random phenomenon?

- What is the formula for the likelihood function for $p$ given the observed shots?

- For what value of $p$ is the likelihood function maximized?
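Here is a worked version of these steps. It assumes $n$ independent shots with $k$ made, each made with probability $p$, and $0 < k < n$ so that the maximum is in the interior of $(0, 1)$. If only the count $k$ is recorded, the likelihood gains a binomial coefficient $\binom{n}{k}$. That factor is a constant and does not change the maximizer.

```latex
\begin{aligned}
L(p) &= p^{k}(1 - p)^{n - k} \\
\log L(p) &= k \log p + (n - k) \log(1 - p) \\
\frac{d}{dp} \log L(p) &= \frac{k}{p} - \frac{n - k}{1 - p} = 0
  \quad\Longrightarrow\quad k(1 - p) = (n - k)p
  \quad\Longrightarrow\quad \hat{p} = \frac{k}{n} \\
\frac{d^2}{dp^2} \log L(p) &= -\frac{k}{p^2} - \frac{n - k}{(1 - p)^2} < 0
\end{aligned}
```

The negative second derivative confirms that $\hat{p} = k/n$, the observed proportion of made shots, is a maximum.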

MLE in linear regression

Why maximum likelihood estimation?

“Maximum likelihood estimation is, by far, the most popular technique for deriving estimators.” (Casella and Berger 2024, 315)

MLEs have nice statistical properties (more on this next class):

- Consistent

- Efficient

- Asymptotically normal

Note

If the normality assumption holds, the least-squares estimator is the maximum likelihood estimator for $\boldsymbol{\beta}$.

Linear regression

Recall the linear model $\mathbf{y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\epsilon}$.

- We have discussed least-squares estimation to find $\hat{\boldsymbol{\beta}}$.

- We have used the fact that

- Now we will discuss how we know

Simple linear regression model

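Suppose we have the simple linear regression (SLR) model $Y_i = \beta_0 + \beta_1 x_i + \epsilon_i$ for $i = 1, \dots, n$,

such that $\epsilon_i \overset{iid}{\sim} N(0, \sigma^2)$.

We can write this model in the form $Y_i \mid x_i \sim N(\beta_0 + \beta_1 x_i, \sigma^2)$ and use this to find the MLE.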

Side note: Normal distribution

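Let $X \sim N(\mu, \sigma^2)$. Then the probability density function of $X$ is $f(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{-\frac{(x - \mu)^2}{2\sigma^2}\right\}$.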

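SLR: Likelihood for $\beta_0, \beta_1, \sigma^2$

The likelihood function for $\beta_0$, $\beta_1$, and $\sigma^2$ is the product of the $n$ normal densities of $Y_1, \dots, Y_n$, because the observations are independent.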
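This assumes $Y_i \mid x_i \sim N(\beta_0 + \beta_1 x_i, \sigma^2)$ independently for $i = 1, \dots, n$. Under that assumption, the product of the densities is:

```latex
\begin{aligned}
L(\beta_0, \beta_1, \sigma^2 \mid x_1, \dots, x_n, y_1, \dots, y_n)
&= \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi\sigma^2}}
   \exp\left\{-\frac{(y_i - \beta_0 - \beta_1 x_i)^2}{2\sigma^2}\right\} \\
&= (2\pi\sigma^2)^{-n/2}
   \exp\left\{-\frac{1}{2\sigma^2} \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i)^2\right\}
\end{aligned}
```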

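Log-Likelihood for $\beta_0, \beta_1, \sigma^2$

The log-likelihood function for $\beta_0$, $\beta_1$, and $\sigma^2$ is the log of the likelihood, which turns the product of densities into a sum.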
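Taking the log of the SLR likelihood (independent observations with $N(0, \sigma^2)$ errors) gives:

```latex
\log L(\beta_0, \beta_1, \sigma^2)
  = -\frac{n}{2}\log(2\pi) - \frac{n}{2}\log(\sigma^2)
    - \frac{1}{2\sigma^2} \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i)^2
```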

MLE for $\beta_0$

1️⃣ Take the derivative of $\log L$ with respect to $\beta_0$ and set it equal to 0.
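In the SLR log-likelihood, only the term $-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(y_i - \beta_0 - \beta_1 x_i)^2$ depends on $\beta_0$. The derivative is therefore:

```latex
\frac{\partial \log L}{\partial \beta_0}
  = \frac{1}{\sigma^2} \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i) = 0
```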

MLE for $\beta_0$

2️⃣ Find the value of $\beta_0$ that solves this equation.

After a few steps…
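Starting from $\frac{1}{\sigma^2}\sum_{i=1}^{n}(y_i - \beta_0 - \beta_1 x_i) = 0$, multiply through by $\sigma^2 > 0$ and solve for $\beta_0$:

```latex
\begin{aligned}
\sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i) = 0
&\quad\Longrightarrow\quad \sum_{i=1}^{n} y_i - n\beta_0 - \beta_1 \sum_{i=1}^{n} x_i = 0 \\
&\quad\Longrightarrow\quad \beta_0 = \bar{y} - \beta_1 \bar{x}
\end{aligned}
```

Substituting the MLE of $\beta_1$ for $\beta_1$ gives the MLE of $\beta_0$.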

MLE for $\beta_0$

3️⃣ We can use the second derivative to show we’ve found the maximum
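Differentiating the first derivative $\frac{1}{\sigma^2}\sum_{i=1}^{n}(y_i - \beta_0 - \beta_1 x_i)$ once more with respect to $\beta_0$ gives a value that is negative for every $\beta_0$:

```latex
\frac{\partial^2 \log L}{\partial \beta_0^2}
  = \frac{\partial}{\partial \beta_0}\left[\frac{1}{\sigma^2}\sum_{i=1}^{n}(y_i - \beta_0 - \beta_1 x_i)\right]
  = -\frac{n}{\sigma^2} < 0
```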

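Therefore, we have found the maximum. Thus, the MLE for $\beta_0$ is $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$, where $\hat{\beta}_1$ is the MLE of $\beta_1$.

Note that this is the same as the least-squares estimator of $\beta_0$.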

MLE for $\beta_1$ and $\sigma^2$

We can use a similar process to find the MLEs for $\beta_1$ and $\sigma^2$.
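Here is a sketch of where that process leads. Setting $\partial \log L / \partial \beta_1 = 0$ and $\partial \log L / \partial \sigma^2 = -\frac{n}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_{i=1}^{n}(y_i - \beta_0 - \beta_1 x_i)^2 = 0$, and solving together with the $\beta_0$ equation, gives:

```latex
\begin{aligned}
\hat{\beta}_1 &= \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n}(x_i - \bar{x})^2} \\
\hat{\sigma}^2 &= \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i\right)^2
\end{aligned}
```

The slope matches the least-squares slope. The MLE of $\sigma^2$ divides the sum of squared residuals by $n$, whereas the unbiased estimator used with least squares in SLR divides by $n - 2$.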

Note:

MLE in matrix form

MLE for linear regression in matrix form

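- For a fixed value of $\sigma^2$, the only term of the log-likelihood that depends on $\boldsymbol{\beta}$ is $-\frac{1}{2\sigma^2}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})^\mathsf{T}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})$.

- What does this tell us about the relationship between the MLE and least-squares estimator for $\boldsymbol{\beta}$?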
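This sketch assumes $\mathbf{y} \sim N(\mathbf{X}\boldsymbol{\beta}, \sigma^2\mathbf{I})$ with $n$ observations and $\mathbf{X}^\mathsf{T}\mathbf{X}$ invertible. Under those assumptions, the full log-likelihood and its maximizer over $\boldsymbol{\beta}$, for any fixed $\sigma^2 > 0$, are:

```latex
\begin{aligned}
\log L(\boldsymbol{\beta}, \sigma^2 \mid \mathbf{X}, \mathbf{y})
&= -\frac{n}{2}\log(2\pi) - \frac{n}{2}\log(\sigma^2)
   - \frac{1}{2\sigma^2}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})^\mathsf{T}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta}) \\
\operatorname*{arg\,max}_{\boldsymbol{\beta}} \log L
&= \operatorname*{arg\,min}_{\boldsymbol{\beta}} (\mathbf{y} - \mathbf{X}\boldsymbol{\beta})^\mathsf{T}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})
 = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\mathbf{y}
\end{aligned}
```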

Putting it all together

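The MLE of $\boldsymbol{\beta}$ equals the least-squares estimator $\hat{\boldsymbol{\beta}} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\mathbf{y}$ when the errors are independent and normally distributed with constant variance.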
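Here is a small numerical check of this result for SLR. It computes the least-squares estimates in closed form, sets $\hat{\sigma}^2$ to the sum of squared residuals divided by $n$, and confirms that nudging any parameter away from these values lowers the normal log-likelihood. The data and function names are hypothetical. Checking a few nearby points illustrates the result rather than proving it.

```python
import math


def slr_least_squares(x, y):
    """Closed-form least-squares estimates for y = b0 + b1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def slr_log_likelihood(b0, b1, sigma2, x, y):
    """Normal log-likelihood for SLR with independent N(0, sigma2) errors."""
    n = len(x)
    ssr = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    return -n / 2 * math.log(2 * math.pi) - n / 2 * math.log(sigma2) - ssr / (2 * sigma2)


# Hypothetical toy data, made up for this illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

b0, b1 = slr_least_squares(x, y)
n = len(x)
# MLE of sigma^2: sum of squared residuals divided by n (not n - 2).
sigma2_mle = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y)) / n

best = slr_log_likelihood(b0, b1, sigma2_mle, x, y)
# Nudge one parameter at a time: (change in b0, change in b1, change in sigma^2).
nudges = [(0.1, 0, 0), (-0.1, 0, 0), (0, 0.05, 0), (0, -0.05, 0), (0, 0, 0.01), (0, 0, -0.01)]
nudged_values = [
    slr_log_likelihood(b0 + d0, b1 + d1, sigma2_mle + ds, x, y) for d0, d1, ds in nudges
]
print(b0, b1, sigma2_mle)
print(all(v < best for v in nudged_values))  # every nudge lowers the log-likelihood
```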

MLEs have nice properties, so when the normality assumption holds, the least-squares estimator $\hat{\boldsymbol{\beta}}$ has these properties as well.

The MLE

References