Logistic Regression: Model comparison

Apr 03, 2025

Announcements

HW 04 due April 10 - released later today

Team Feedback (email from TEAMMATES) due Tuesday, April 8 at 11:59pm (check email)

Next project milestone: Analysis and draft in April 11 lab

Statistics experience due April 22

Questions from this week’s content?

Topics

Comparing models using AIC and BIC

Test of significance for a subset of predictors

Computational setup

Risk of coronary heart disease

This data set is from an ongoing cardiovascular study on residents of the town of Framingham, Massachusetts. We want to examine the relationship between various health characteristics and the risk of having heart disease.

high_risk:

- 1: High risk of having heart disease in next 10 years
- 0: Not high risk of having heart disease in next 10 years

age: Age at exam time (in years)

totChol: Total cholesterol (in mg/dL)

currentSmoker: 0 = nonsmoker, 1 = smoker

education: 1 = Some High School, 2 = High School or GED, 3 = Some College or Vocational School, 4 = College

Modeling risk of coronary heart disease

Using age, totChol, and currentSmoker

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |
|---|---|---|---|---|---|---|
| (Intercept) | -6.673 | 0.378 | -17.647 | 0.000 | -7.423 | -5.940 |
| age | 0.082 | 0.006 | 14.344 | 0.000 | 0.071 | 0.094 |
| totChol | 0.002 | 0.001 | 1.940 | 0.052 | 0.000 | 0.004 |
| currentSmoker1 | 0.443 | 0.094 | 4.733 | 0.000 | 0.260 | 0.627 |

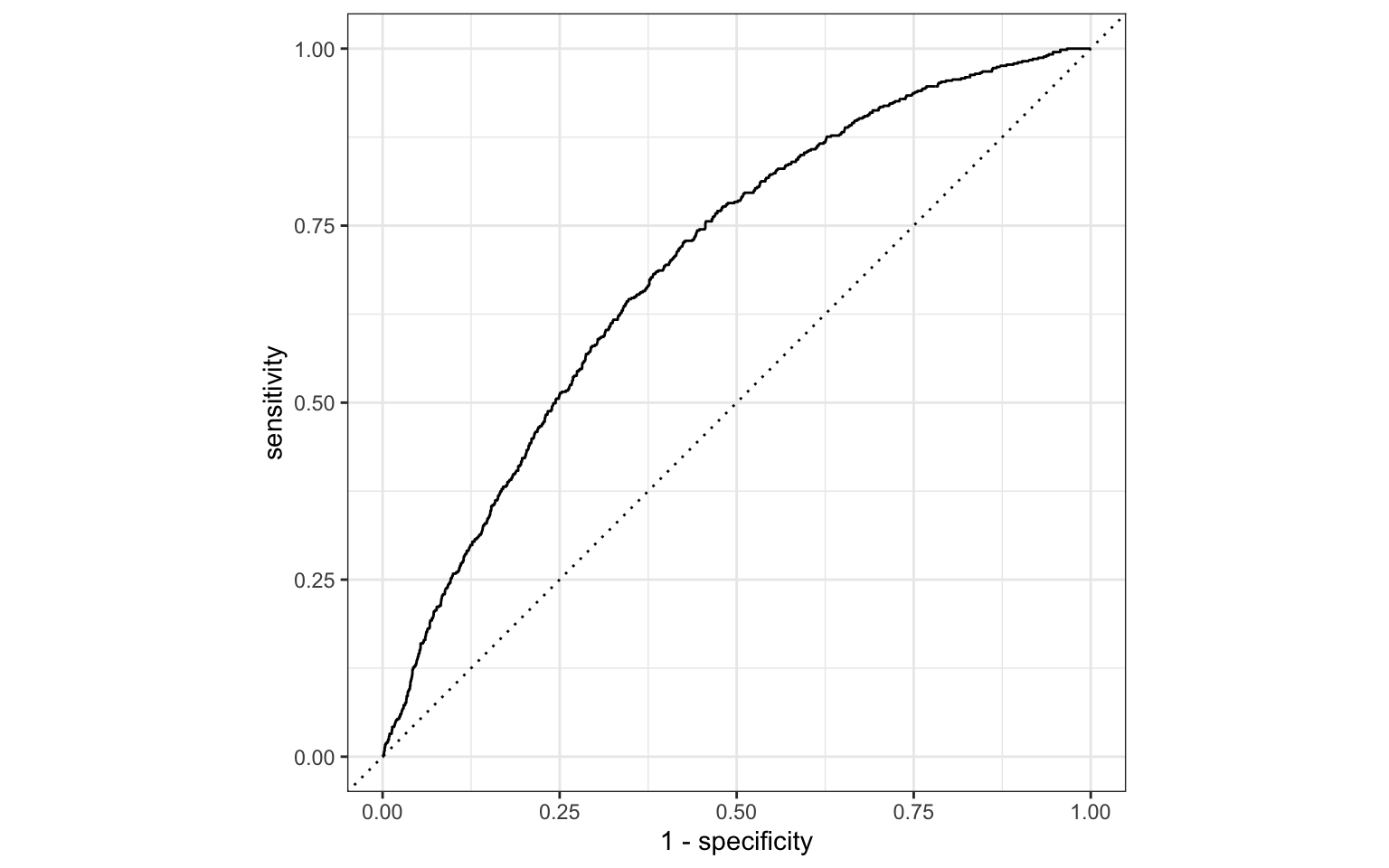

Review: ROC Curve + Model fit

| .metric | .estimator | .estimate |
|---|---|---|
| roc_auc | binary | 0.697 |

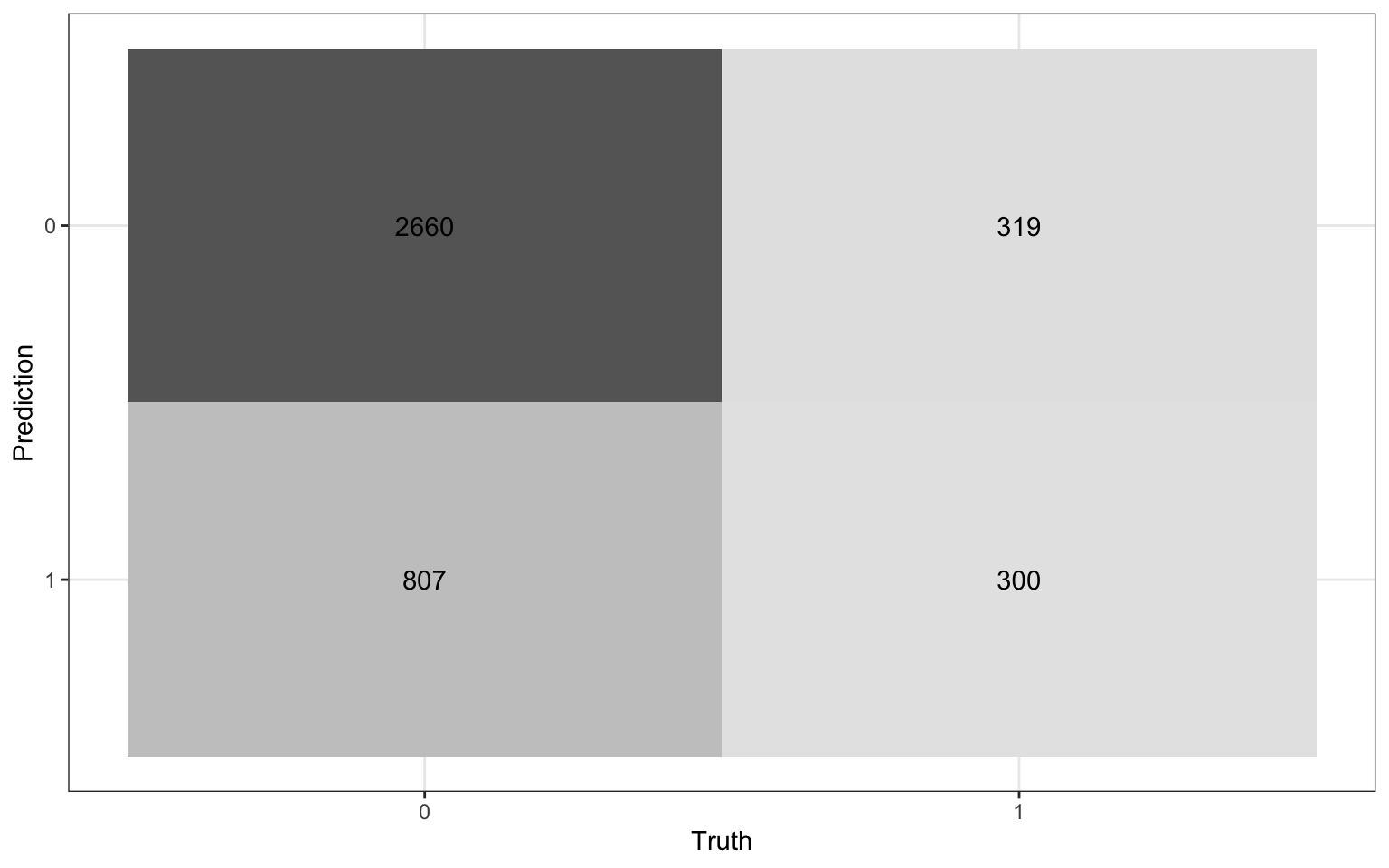

Review: Classification

We will use a threshold of 0.2 to classify observations

Review: Classification

Compute the misclassification rate.

Compute sensitivity and explain what it means in the context of the data.

Compute specificity and explain what it means in the context of the data.
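The three quantities above can be computed directly from observed outcomes and predicted probabilities. The sketch below uses a small made-up vector of outcomes and probabilities (toy values for illustration only, not the Framingham data) together with the 0.2 threshold from the slides. The variable names are hypothetical.

```r
# Toy data for illustration only (NOT the Framingham data)
truth     <- c(1, 0, 0, 1, 0, 1, 0, 0, 1, 0)   # observed high_risk
pred_prob <- c(0.35, 0.10, 0.25, 0.15, 0.05,
               0.40, 0.18, 0.30, 0.22, 0.08)   # predicted probabilities

# Classify as high risk when the predicted probability exceeds the threshold
threshold  <- 0.2
pred_class <- as.integer(pred_prob > threshold)

# Cells of the confusion matrix
tp <- sum(pred_class == 1 & truth == 1)  # true positives
tn <- sum(pred_class == 0 & truth == 0)  # true negatives
fp <- sum(pred_class == 1 & truth == 0)  # false positives
fn <- sum(pred_class == 0 & truth == 1)  # false negatives

misclassification <- (fp + fn) / length(truth)  # proportion classified incorrectly
sensitivity <- tp / (tp + fn)  # P(classified high risk | truly high risk)
specificity <- tn / (tn + fp)  # P(classified not high risk | truly not high risk)
```

In the context of the data, sensitivity is the proportion of truly high-risk patients the model flags as high risk, and specificity is the proportion of patients who are not high risk that the model correctly leaves unflagged.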

Model comparison

Which model do we choose?

Reduced model:

| term | estimate |
|---|---|
| (Intercept) | -6.673 |
| age | 0.082 |
| totChol | 0.002 |
| currentSmoker1 | 0.443 |

Full model (adds education):

| term | estimate |
|---|---|
| (Intercept) | -6.456 |
| age | 0.080 |
| totChol | 0.002 |
| currentSmoker1 | 0.445 |
| education2 | -0.270 |
| education3 | -0.232 |
| education4 | -0.035 |

Log-Likelihood

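Recall the log-likelihood function

$$\log L = \sum_{i=1}^{n} \left[ y_i \log(\hat{\pi}_i) + (1 - y_i) \log(1 - \hat{\pi}_i) \right]$$

where $\hat{\pi}_i$ is the predicted probability that $y_i = 1$ for observation $i$.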
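As a quick check of the log-likelihood for a binary response, the sketch below fits a logistic regression to simulated data (the data and the names `x`, `y`, and `fit` are made up for illustration) and compares the hand-computed sum with base R's `logLik()`.

```r
# Simulated data for illustration only
set.seed(210)
n <- 500
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-1 + 0.8 * x))

fit <- glm(y ~ x, family = binomial)

# Predicted probabilities from the fitted model
pi_hat <- fitted(fit)

# Log-likelihood computed term by term
log_lik_manual <- sum(y * log(pi_hat) + (1 - y) * log(1 - pi_hat))

# Log-likelihood reported by R
log_lik_builtin <- as.numeric(logLik(fit))

# The two values should agree up to numerical tolerance
all.equal(log_lik_manual, log_lik_builtin)
```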

AIC & BIC

Estimators of prediction error and relative quality of models:

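Akaike’s Information Criterion (AIC):

$$AIC = -2 \log L + 2(p + 1)$$

Schwarz’s Bayesian Information Criterion (BIC):

$$BIC = -2 \log L + \log(n)(p + 1)$$

Here $p$ is the number of predictor terms in the model (so $p + 1$ counts the intercept) and $n$ is the number of observations.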

AIC & BIC

First term ($-2 \log L$): Decreases as p increases

AIC & BIC

Second term (the penalty, $2(p + 1)$ for AIC and $\log(n)(p + 1)$ for BIC): Increases as p increases

Using AIC & BIC

Choose model with the smaller value of AIC or BIC

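If $n \geq 8$, then $\log(n) > 2$, so the penalty for BIC is larger than the penalty for AIC, and BIC tends to favor models with fewer terms.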

AIC from the glance() function

Let’s look at the AIC for the model that includes age, totChol, and currentSmoker
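The `glance()` output is not reproduced here. As a hedged cross-check, AIC and BIC for this model can be computed by hand from the log-likelihood reported later in these slides (-1612.406). The sample size is an assumption: it is recovered from the residual degrees of freedom (4082) shown in the `anova()` table plus the four estimated coefficients.

```r
# Log-likelihood for the model with age, totChol, currentSmoker (from the slides)
log_lik <- -1612.406

# p + 1 = 4: intercept plus three slope coefficients
n_params <- 4

# Assumption: n recovered as residual df (4082) + number of coefficients
n_obs <- 4082 + n_params

# AIC = -2 log L + 2(p + 1)
aic <- -2 * log_lik + 2 * n_params

# BIC = -2 log L + log(n)(p + 1)
bic <- -2 * log_lik + log(n_obs) * n_params

aic  # 3232.812
bic  # larger than AIC because log(n) > 2
```

With a fitted model object, base R's `AIC()` and `BIC()` return these quantities, and `glance()` reports them in its `AIC` and `BIC` columns.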

Comparing the models using AIC

Let’s compare the full and reduced models using AIC.

Based on AIC, which model would you choose?

Comparing the models using BIC

Let’s compare the full and reduced models using BIC.

Based on BIC, which model would you choose?

Drop-in-deviance test

We will use a drop-in-deviance test (also known as a likelihood ratio test) to test

- the overall statistical significance of a logistic regression model
- the statistical significance of a subset of coefficients in the model

Deviance

The deviance is a measure of the degree to which the predicted values are different from the observed values (compares the current model to a “saturated” model)

In logistic regression,

$$D = -2 \log L$$

Note:

Test for overall significance

We can test the overall significance for a logistic regression model, i.e., whether there is at least one predictor with a non-zero coefficient

The drop-in-deviance test for overall significance compares the fit of a model with no predictors to the current model.

Drop-in-deviance test statistic

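Let $L_0$ and $L_a$ be the likelihood functions under $H_0$ and $H_a$, respectively. The test statistic is

$$G = -2(\log L_0 - \log L_a)$$

where $\log L_0$ is the log-likelihood of the null (intercept-only) model and $\log L_a$ is the log-likelihood of the current model.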

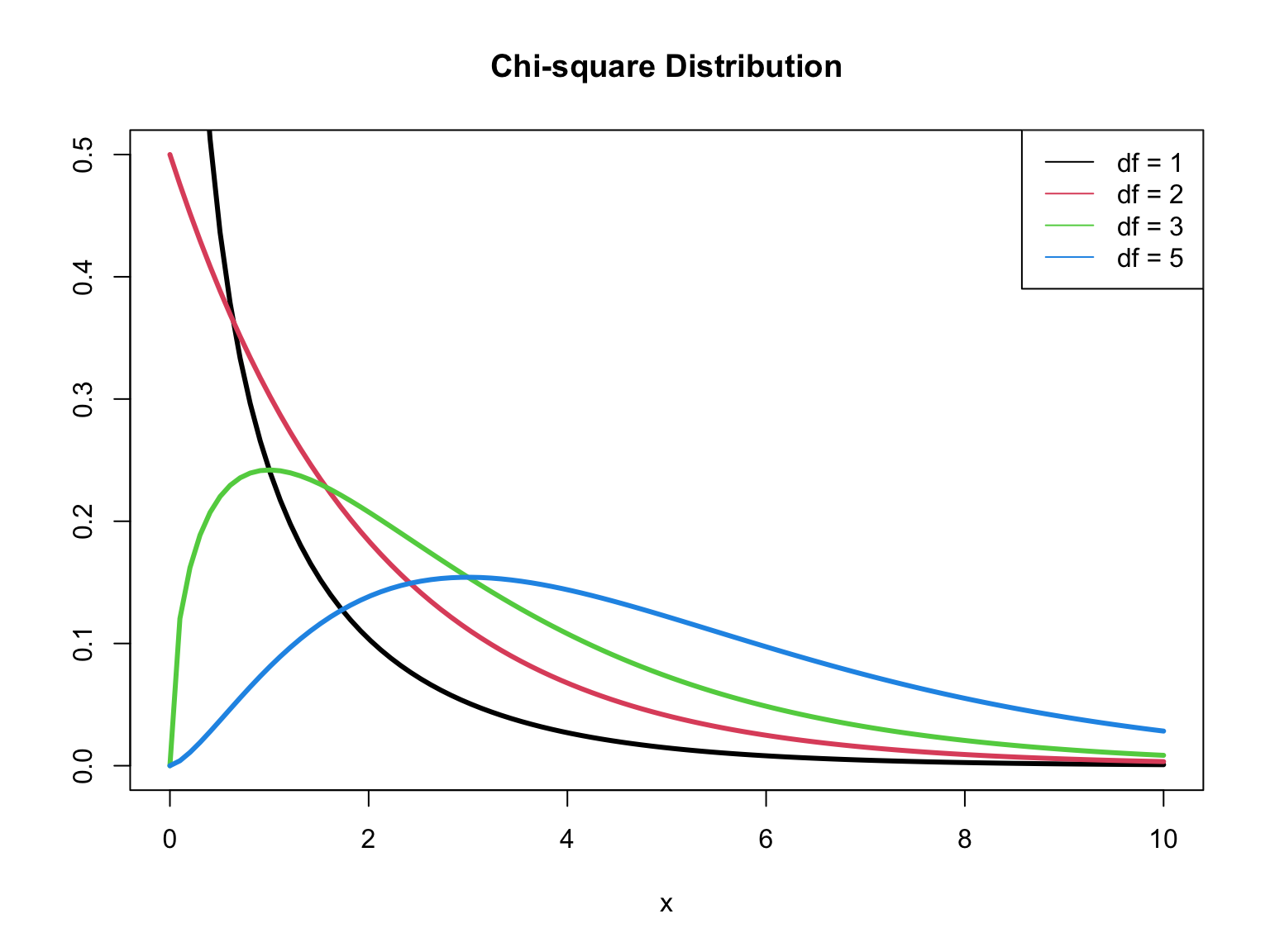

Drop-in-deviance test statistic

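When $n$ is large, $G$ approximately follows a $\chi^2$ distribution with $p$ degrees of freedom, where $p$ is the number of predictor coefficients being tested.

The p-value is calculated as $P(\chi^2 > G)$.

Large values of $G$ (small p-values) indicate evidence that at least one coefficient is non-zero.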

Heart disease model: drop-in-deviance test

Calculate the log-likelihood for the null and alternative models

[1] -1737.735

[1] -1612.406

Heart disease model: likelihood ratio test

Calculate the p-value
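Using the two log-likelihoods printed above (null model first, then the model with age, totChol, and currentSmoker), the test statistic and p-value can be sketched as follows. The degrees of freedom are taken to be 3, one per predictor coefficient being tested. The variable names are illustrative.

```r
# Log-likelihoods reported in the slides
log_lik_0 <- -1737.735  # null (intercept-only) model
log_lik_a <- -1612.406  # model with age, totChol, currentSmoker

# Drop-in-deviance (likelihood ratio) test statistic
G <- -2 * (log_lik_0 - log_lik_a)

# Three coefficients are tested: age, totChol, currentSmoker1
df_test <- 3

# Upper-tail probability from the chi-square distribution
p_value <- pchisq(G, df = df_test, lower.tail = FALSE)

G  # 250.658
p_value
```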

Conclusion

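The p-value is small, so we reject $H_0$. The data provide sufficient evidence that at least one predictor in the model has a non-zero coefficient.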

Why use overall test?

Why do we use a test for overall significance instead of just looking at the test for individual coefficients?

Suppose we have a model such that $p = 100$ and $H_0: \beta_1 = \dots = \beta_{100} = 0$ is true.

About 5% of the p-values for individual coefficients will be below 0.05 by chance.

So we expect to see about 5 small p-values even if no association actually exists.

Therefore, it is very likely we will see at least one small p-value by chance.

The overall test of significance does not have this problem. There is only a 5% chance we will get a p-value below 0.05, if a relationship truly does not exist.
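The arithmetic behind this argument can be sketched in a few lines. The calculation of the probability of at least one small p-value assumes the 100 individual tests are independent, which is a simplification made here for illustration.

```r
n_tests <- 100   # number of coefficients tested individually
alpha   <- 0.05  # significance level for each test

# Expected number of p-values below alpha when every null hypothesis is true
expected_false_positives <- n_tests * alpha

# Probability of at least one p-value below alpha,
# ASSUMING the individual tests are independent
prob_at_least_one <- 1 - (1 - alpha)^n_tests

expected_false_positives  # 5
prob_at_least_one         # above 0.99
```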

Test a subset of coefficients

Testing a subset of coefficients

Suppose there are two models:

Reduced Model: includes predictors $x_1, \ldots, x_q$

Full Model: includes predictors $x_1, \ldots, x_q, x_{q+1}, \ldots, x_p$

We can use a drop-in-deviance test to determine if any of the new predictors are useful

Drop-in-deviance test

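The test statistic is

$$G = D_{reduced} - D_{full} = -2(\log L_{reduced} - \log L_{full})$$

The p-value is calculated using a $\chi^2_{\Delta df}$ distribution, where $\Delta df$ is the number of parameters being tested (the difference in the number of parameters between the full and reduced models).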

Example: Include education?

Should we include education in the model?

Reduced model: age, totChol, currentSmoker

Full model: age, totChol, currentSmoker, education

Example: Include education?

Calculate deviances

Example: Include education?

Calculate p-value

What is your conclusion? Would you include education in the model that already has age, totChol, currentSmoker?

Drop-in-deviance test in R

Conduct the drop-in-deviance test using the anova() function in R with option test = "Chisq"
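A minimal sketch of this call on simulated data (the data frame `dat` and its columns are made up for illustration, not the Framingham data). It also checks that the `anova()` output matches the difference in deviances computed by hand.

```r
# Simulated data for illustration only
set.seed(210)
n      <- 1000
age    <- rnorm(n, mean = 50, sd = 8)
smoker <- rbinom(n, size = 1, prob = 0.5)
noise  <- rnorm(n)  # predictor unrelated to the response
y      <- rbinom(n, size = 1, prob = plogis(-5 + 0.08 * age + 0.4 * smoker))
dat    <- data.frame(y, age, smoker, noise)

reduced <- glm(y ~ age + smoker, data = dat, family = binomial)
full    <- glm(y ~ age + smoker + noise, data = dat, family = binomial)

# Drop-in-deviance test: reduced model first, then full model
test_out <- anova(reduced, full, test = "Chisq")
test_out

# The same test computed by hand
G_manual  <- deviance(reduced) - deviance(full)
df_manual <- df.residual(reduced) - df.residual(full)
p_manual  <- pchisq(G_manual, df = df_manual, lower.tail = FALSE)
```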

Add interactions with currentSmoker?

| term | df.residual | residual.deviance | df | deviance | p.value |
|---|---|---|---|---|---|
| high_risk ~ age + totChol + currentSmoker | 4082 | 3224.812 | NA | NA | NA |
| high_risk ~ age + totChol + currentSmoker + currentSmoker * age + currentSmoker * totChol | 4080 | 3222.377 | 2 | 2.435 | 0.296 |
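The last row of the table can be reproduced from the two residual deviances and residual degrees of freedom it reports. The variable names below are illustrative.

```r
# Values taken from the table
dev_reduced <- 3224.812  # residual deviance, model without interactions
dev_full    <- 3222.377  # residual deviance, model with interactions
df_diff     <- 4082 - 4080  # two interaction coefficients are tested

# Drop-in-deviance test statistic
G <- dev_reduced - dev_full

# Upper-tail chi-square probability
p_value <- pchisq(G, df = df_diff, lower.tail = FALSE)

round(G, 3)        # 2.435
round(p_value, 3)  # 0.296
```

Since the p-value is well above 0.05, the data do not provide evidence that the interactions with currentSmoker improve the model.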

Questions from this week’s content?

Recap

Introduced model comparison for logistic regression using

AIC and BIC

Drop-in-deviance test
