Properties of estimators

Announcements

- HW 03 due TODAY at 11:59pm

- Project exploratory data analysis due TODAY at 11:59pm

  - Next project milestone: Presentations in the March 28 lab

- Statistics experience due April 22

Questions from this week’s content?

Topics

- Properties of the least squares estimator

This is not a mathematical statistics class. There are semester-long courses that will go into these topics in much more detail; we will barely scratch the surface in this course.

Our goals are to understand:

- That estimators have properties

- A few properties of the least squares estimator and why they are useful

Properties of $\hat{\boldsymbol{\beta}}$

Motivation

We have discussed how to use least squares and maximum likelihood estimation to find estimators for the regression coefficients $\boldsymbol{\beta}$.

How do we know whether our least squares estimator (and MLE) is a “good” estimator?

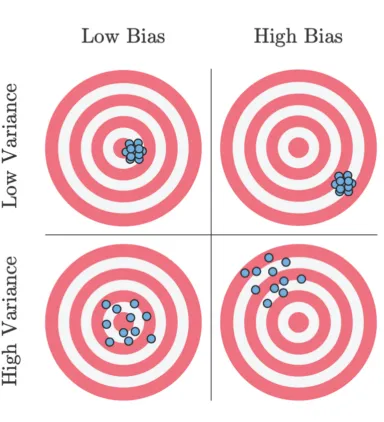

When we consider what makes an estimator “good”, we’ll look at three criteria:

- Bias

- Variance

- Mean squared error

Bias and variance

Suppose you are throwing darts at a target


Unbiased: Darts distributed around the target

Biased: Darts systematically away from the target

Variance: Darts could be widely spread (high variance) or generally clustered together (low variance)

Bias and variance

Ideal scenario: Darts are clustered around the target (unbiased and low variance)

Worst case scenario: Darts are widely spread out and systematically far from the target (high bias and high variance)

Acceptable scenario: There’s some trade-off between the bias and variance. For example, it may be acceptable for the darts to be clustered around a point that is close to the target (low bias and low variance)

Bias and variance

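Each time we take a sample of size $n$, we can compute the least squares estimator $\hat{\boldsymbol{\beta}}$ (throw one dart at the target).

Suppose we take many independent samples of size $n$ and compute $\hat{\boldsymbol{\beta}}$ for each sample (throw many darts at the target). Ideally:

- The estimators are centered at the true parameter (unbiased)

- The estimators are clustered around the true parameter (unbiased with low variance)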
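The dart analogy can be sketched with a small simulation. This is a minimal illustration, not part of the lecture: the true coefficients, error standard deviation, sample size, and number of repeated samples are all hypothetical values chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "true" model for illustration: y = 2 + 3x + error
beta_true = np.array([2.0, 3.0])
sigma = 1.5
n, n_samples = 50, 5000

# Fixed design matrix with an intercept column
x = np.linspace(0, 10, n)
X = np.column_stack([np.ones(n), x])

estimates = np.empty((n_samples, 2))
for s in range(n_samples):
    # Draw a new sample of size n from the same model
    y = X @ beta_true + rng.normal(0.0, sigma, size=n)
    # Least squares fit for this sample (one "dart")
    estimates[s] = np.linalg.lstsq(X, y, rcond=None)[0]

center = estimates.mean(axis=0)  # where the darts are centered
spread = estimates.std(axis=0)   # how tightly the darts cluster
print("average estimate:", center)
print("standard deviation of estimates:", spread)
```

If the estimator is unbiased, the average estimate should sit close to the true coefficients; the standard deviations describe how widely the "darts" are spread.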

Properties of $\hat{\boldsymbol{\beta}}$

Finite sample ($n$) properties

- Unbiased estimator

- Best Linear Unbiased Estimator (BLUE)

Asymptotic ($n \to \infty$) properties

- Consistent estimator

- Efficient estimator

- Asymptotic normality

Finite sample properties

Unbiased estimator

The bias of an estimator is the difference between the estimator’s expected value and the true value of the parameter

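Let $\hat{\theta}$ be an estimator of the parameter $\theta$. Then

$$Bias(\hat{\theta}) = E(\hat{\theta}) - \theta$$

An estimator is unbiased if the bias is 0 and thus $E(\hat{\theta}) = \theta$.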
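As a quick illustration of the definition (an example added here, not one taken from the lecture), consider independent observations $Y_1, \dots, Y_n$ with mean $\mu$ and variance $\sigma^2$. The sample mean $\bar{Y}$ is unbiased for $\mu$, while the variance estimator that divides by $n$ is biased:

```latex
\begin{aligned}
E(\bar{Y}) &= \frac{1}{n}\sum_{i=1}^{n} E(Y_i) = \mu
  &&\Rightarrow\quad Bias(\bar{Y}) = 0 \\
E\!\left[\frac{1}{n}\sum_{i=1}^{n}(Y_i - \bar{Y})^2\right] &= \frac{n-1}{n}\,\sigma^2
  &&\Rightarrow\quad Bias = -\frac{\sigma^2}{n}
\end{aligned}
```

The second bias shrinks to 0 as $n$ grows, so an estimator can be biased in finite samples and still behave well asymptotically.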

Expected value of $\hat{\boldsymbol{\beta}}$

Let’s take a look at the expected value of the least-squares estimator:
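A sketch of the standard derivation, assuming the model $\mathbf{y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\epsilon}$ with $E(\boldsymbol{\epsilon}) = \mathbf{0}$, a fixed design matrix $\mathbf{X}$ of full column rank, and $\hat{\boldsymbol{\beta}} = (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{y}$:

```latex
\begin{aligned}
E(\hat{\boldsymbol{\beta}})
  &= E\!\left[(\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{y}\right] \\
  &= (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top E(\mathbf{y}) \\
  &= (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{X}\boldsymbol{\beta} \\
  &= \boldsymbol{\beta}
\end{aligned}
```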

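Expected value of $\hat{\boldsymbol{\beta}}$

The least squares estimator (and MLE) $\hat{\boldsymbol{\beta}}$ is an unbiased estimator of $\boldsymbol{\beta}$.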

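Variance of $\hat{\boldsymbol{\beta}}$

We will show that $\hat{\boldsymbol{\beta}}$ is the “best” estimator (has the smallest variance) among the class of linear unbiased estimators.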
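For reference, a sketch of the variance of the least squares estimator, assuming a fixed design matrix $\mathbf{X}$ and uncorrelated errors with constant variance, $Var(\boldsymbol{\epsilon}) = \sigma^2\mathbf{I}$, so that $Var(\mathbf{y}) = \sigma^2\mathbf{I}$:

```latex
\begin{aligned}
Var(\hat{\boldsymbol{\beta}})
  &= Var\!\left[(\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{y}\right] \\
  &= (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top \, Var(\mathbf{y}) \, \mathbf{X}(\mathbf{X}^\top\mathbf{X})^{-1} \\
  &= \sigma^2 (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{X}(\mathbf{X}^\top\mathbf{X})^{-1} \\
  &= \sigma^2 (\mathbf{X}^\top\mathbf{X})^{-1}
\end{aligned}
```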

Gauss-Markov Theorem Proof

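Suppose $\tilde{\boldsymbol{\beta}}$ is another linear unbiased estimator of $\boldsymbol{\beta}$ that can be written as $\tilde{\boldsymbol{\beta}} = \mathbf{C}\mathbf{y}$.

Let $\mathbf{C} = (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top + \mathbf{B}$ for a non-zero matrix $\mathbf{B}$.

What is the dimension of $\mathbf{B}$?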

Gauss-Markov Theorem Proof

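We need to show that the alternative linear estimator is unbiased and that its variance is at least as large as $Var(\hat{\boldsymbol{\beta}})$.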
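A sketch of the expectation step, under notation assumed for this sketch: the alternative estimator is $\tilde{\boldsymbol{\beta}} = \mathbf{C}\mathbf{y}$ with $\mathbf{C} = (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top + \mathbf{B}$, the design matrix $\mathbf{X}$ is fixed, and $E(\boldsymbol{\epsilon}) = \mathbf{0}$ so that $E(\mathbf{y}) = \mathbf{X}\boldsymbol{\beta}$:

```latex
\begin{aligned}
E(\tilde{\boldsymbol{\beta}})
  &= E(\mathbf{C}\mathbf{y}) = \mathbf{C}\,E(\mathbf{y}) \\
  &= \left[(\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top + \mathbf{B}\right]\mathbf{X}\boldsymbol{\beta} \\
  &= \boldsymbol{\beta} + \mathbf{B}\mathbf{X}\boldsymbol{\beta}
\end{aligned}
```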

Gauss-Markov Theorem Proof

What assumption(s) of the Gauss-Markov Theorem did we use?

What must be true for the alternative estimator to be unbiased?

Gauss-Markov Theorem Proof

Now we need to find the variance of the alternative estimator.
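A sketch of the variance step, under the same assumed notation: $\tilde{\boldsymbol{\beta}} = \mathbf{C}\mathbf{y}$ with $\mathbf{C} = (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top + \mathbf{B}$, $Var(\mathbf{y}) = \sigma^2\mathbf{I}$, and $\mathbf{B}\mathbf{X} = \mathbf{0}$ (the condition needed for $\tilde{\boldsymbol{\beta}}$ to be unbiased for every $\boldsymbol{\beta}$):

```latex
\begin{aligned}
Var(\tilde{\boldsymbol{\beta}})
  &= \mathbf{C}\,Var(\mathbf{y})\,\mathbf{C}^\top = \sigma^2\,\mathbf{C}\mathbf{C}^\top \\
  &= \sigma^2\left[(\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top + \mathbf{B}\right]
             \left[\mathbf{X}(\mathbf{X}^\top\mathbf{X})^{-1} + \mathbf{B}^\top\right] \\
  &= \sigma^2\left[(\mathbf{X}^\top\mathbf{X})^{-1}
       + (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{B}^\top
       + \mathbf{B}\mathbf{X}(\mathbf{X}^\top\mathbf{X})^{-1}
       + \mathbf{B}\mathbf{B}^\top\right] \\
  &= \sigma^2\left[(\mathbf{X}^\top\mathbf{X})^{-1} + \mathbf{B}\mathbf{B}^\top\right]
\end{aligned}
```

The two cross terms vanish because $\mathbf{B}\mathbf{X} = \mathbf{0}$ and $\mathbf{X}^\top\mathbf{B}^\top = (\mathbf{B}\mathbf{X})^\top = \mathbf{0}$.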

Gauss-Markov Theorem Proof

What assumption(s) of the Gauss-Markov Theorem did we use?

Gauss-Markov Theorem Proof

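We have $Var(\tilde{\boldsymbol{\beta}}) = \sigma^2[(\mathbf{X}^\top\mathbf{X})^{-1} + \mathbf{B}\mathbf{B}^\top]$, where $\tilde{\boldsymbol{\beta}} = [(\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top + \mathbf{B}]\mathbf{y}$ is the alternative linear unbiased estimator.

We know that $Var(\tilde{\boldsymbol{\beta}}) \geq Var(\hat{\boldsymbol{\beta}})$, because $Var(\hat{\boldsymbol{\beta}}) = \sigma^2(\mathbf{X}^\top\mathbf{X})^{-1}$ and $\mathbf{B}\mathbf{B}^\top$ is positive semidefinite.

When is $Var(\tilde{\boldsymbol{\beta}}) = Var(\hat{\boldsymbol{\beta}})$?

Therefore, we have shown that any other linear unbiased estimator has variance at least as large as that of $\hat{\boldsymbol{\beta}}$, so $\hat{\boldsymbol{\beta}}$ is BLUE.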
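The conclusion of the proof can be checked numerically. The sketch below is an illustration under assumptions, not the lecture's own code: the design matrix, $\sigma$, and the matrix `B` are hypothetical, and `B` is built so that $\mathbf{B}\mathbf{X} = \mathbf{0}$, which keeps the alternative estimator unbiased.

```python
import numpy as np

rng = np.random.default_rng(1)
n, sigma = 40, 2.0

# Hypothetical fixed design with an intercept and one predictor
x = rng.uniform(0, 5, size=n)
X = np.column_stack([np.ones(n), x])

# Least squares: beta_hat = A y with A = (X'X)^{-1} X'
A = np.linalg.solve(X.T @ X, X.T)

# Build B with B X = 0 by multiplying an arbitrary matrix by the
# residual-maker matrix M = I - X (X'X)^{-1} X', which satisfies M X = 0
M = np.eye(n) - X @ A
B = rng.normal(size=(2, n)) @ M
C = A + B                          # alternative linear estimator: C y

var_ols = sigma**2 * A @ A.T       # equals sigma^2 (X'X)^{-1}
var_alt = sigma**2 * C @ C.T
diff = var_alt - var_ols           # equals sigma^2 B B'

print(np.allclose(C @ X, np.eye(2)))   # C X = I, so C y is unbiased
print(np.linalg.eigvalsh(diff))        # eigenvalues of the difference are non-negative
```

The difference between the two covariance matrices is positive semidefinite, which is the matrix sense in which the alternative estimator's variance is at least as large as that of least squares.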

Properties of $\hat{\boldsymbol{\beta}}$

Finite sample ($n$) properties

- Unbiased estimator ✅

- Best Linear Unbiased Estimator (BLUE) ✅

Asymptotic ($n \to \infty$) properties

- Consistent estimator

- Efficient estimator

- Asymptotic normality

Asymptotic properties

Properties from the MLE

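Recall that the least-squares estimator $\hat{\boldsymbol{\beta}}$ is equal to the maximum likelihood estimator of $\boldsymbol{\beta}$.

Maximum likelihood estimators have nice statistical properties, and $\hat{\boldsymbol{\beta}}$ inherits all of these properties: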

- Consistency

- Efficiency

- Asymptotic normality

We will define the properties here, and you will explore them in much more depth in STA 332: Statistical Inference

Mean squared error

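The mean squared error (MSE) is the expected squared difference between the estimator and the parameter.

Let $\hat{\theta}$ be an estimator of the parameter $\theta$. Then

$$MSE(\hat{\theta}) = E[(\hat{\theta} - \theta)^2] = Bias(\hat{\theta})^2 + Var(\hat{\theta})$$

Mean squared error

The least-squares estimator $\hat{\boldsymbol{\beta}}$ is unbiased, so its mean squared error equals its variance: $MSE(\hat{\boldsymbol{\beta}}) = Var(\hat{\boldsymbol{\beta}})$.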
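A sketch of why the mean squared error splits into squared bias plus variance, written for a scalar estimator $\hat{\theta}$ of a parameter $\theta$ (the scalar setting is an assumption made to keep the algebra short):

```latex
\begin{aligned}
E\!\left[(\hat{\theta} - \theta)^2\right]
  &= E\!\left[\big(\hat{\theta} - E(\hat{\theta}) + E(\hat{\theta}) - \theta\big)^2\right] \\
  &= E\!\left[\big(\hat{\theta} - E(\hat{\theta})\big)^2\right]
     + 2\big(E(\hat{\theta}) - \theta\big)\,E\!\left[\hat{\theta} - E(\hat{\theta})\right]
     + \big(E(\hat{\theta}) - \theta\big)^2 \\
  &= Var(\hat{\theta}) + Bias(\hat{\theta})^2
\end{aligned}
```

The middle term drops out because $E[\hat{\theta} - E(\hat{\theta})] = 0$.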

Consistency

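An estimator $\hat{\theta}$ is a consistent estimator of the parameter $\theta$ if it converges in probability to $\theta$: for every $\delta > 0$,

$$\lim_{n \to \infty} P(|\hat{\theta} - \theta| < \delta) = 1$$

This means that as the sample size goes to $\infty$, the estimator will be arbitrarily close to the parameter with probability approaching 1.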

Why is this a useful property of an estimator?

Consistency

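Consistency of $\hat{\boldsymbol{\beta}}$

An estimator is consistent if both its bias and its variance go to 0 as $n \to \infty$. Since $\hat{\boldsymbol{\beta}}$ is unbiased, its bias is 0 for every $n$.

Now we need to show that $Var(\hat{\boldsymbol{\beta}}) \to 0$ as $n \to \infty$.

What is $Var(\hat{\boldsymbol{\beta}})$?

Show $Var(\hat{\boldsymbol{\beta}}) \to 0$ as $n \to \infty$.

Therefore, $\hat{\boldsymbol{\beta}}$ is a consistent estimator of $\boldsymbol{\beta}$.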
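The shrinking variance can be seen in a small simulation. This is a minimal sketch under assumptions: the true coefficients, error standard deviation, predictor distribution, and sample sizes are hypothetical choices made for the example.

```python
import numpy as np

rng = np.random.default_rng(2)
beta_true = np.array([1.0, 0.5])   # hypothetical true coefficients
sigma = 1.0

def slope_variance(n, n_samples=2000):
    """Empirical variance of the least squares slope over simulated samples of size n."""
    x = rng.uniform(0, 1, size=n)
    X = np.column_stack([np.ones(n), x])
    A = np.linalg.solve(X.T @ X, X.T)   # maps y to beta_hat
    # Each row of Y is one simulated response vector of length n
    Y = X @ beta_true + rng.normal(0, sigma, size=(n_samples, n))
    slopes = (Y @ A.T)[:, 1]
    return slopes.var()

variances = {n: slope_variance(n) for n in (25, 100, 400, 1600)}
for n, v in variances.items():
    print(n, round(v, 4))
```

Because the estimator is unbiased and its variance keeps falling as $n$ grows, the estimates concentrate around the true slope, which is what consistency describes.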

Efficiency

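An estimator is efficient if it has the smallest variance among a class of estimators as $n \to \infty$.

By the Gauss-Markov Theorem, we have shown that the least-squares estimator $\hat{\boldsymbol{\beta}}$ is the most efficient among linear unbiased estimators.

Under regularity conditions, maximum likelihood estimators are asymptotically the most efficient among all unbiased estimators.

Therefore, because $\hat{\boldsymbol{\beta}}$ is also the MLE, it is the most efficient among both linear and non-linear unbiased estimators of $\boldsymbol{\beta}$.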

Proof of this in a later statistics class.

Asymptotic normality

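Maximum likelihood estimators are asymptotically normal, meaning the distribution of an MLE is approximately normal as $n \to \infty$.

Therefore, we know the distribution of $\hat{\boldsymbol{\beta}}$ is approximately normal when $n$ is large.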

Proof of this in a later statistics class.
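A simulation can make the large-sample normality concrete. The sketch below is an illustration under assumptions and goes slightly beyond the MLE argument: it uses skewed, non-normal errors (so least squares is no longer the MLE), where a central limit theorem argument still gives approximate normality. The coefficients, sample size, and error distribution are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n, n_samples = 200, 5000
beta_true = np.array([1.0, 2.0])   # hypothetical true coefficients

x = rng.uniform(0, 1, size=n)
X = np.column_stack([np.ones(n), x])
A = np.linalg.solve(X.T @ X, X.T)  # maps y to beta_hat

# Skewed, non-normal errors: exponential shifted to have mean 0 and variance 1
errors = rng.exponential(1.0, size=(n_samples, n)) - 1.0
slopes = ((X @ beta_true + errors) @ A.T)[:, 1]

# Standardize with the theoretical standard deviation of the slope,
# sqrt(sigma^2 * [(X'X)^{-1}]_{slope, slope}) with sigma^2 = 1
sd_slope = np.sqrt(np.linalg.inv(X.T @ X)[1, 1])
z = (slopes - beta_true[1]) / sd_slope

# For a standard normal variable, about 95% of values fall within +/- 1.96
coverage = np.mean(np.abs(z) < 1.96)
print(round(coverage, 3))
```

A coverage proportion near 0.95 suggests the standardized slope behaves like a standard normal variable at this sample size, even though the errors themselves are skewed.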

Recap

Finite sample ($n$) properties

- Unbiased estimator ✅

- Best Linear Unbiased Estimator (BLUE) ✅

Asymptotic ($n \to \infty$) properties

- Consistent estimator ✅

- Efficient estimator ✅

- Asymptotic normality ✅