# load packages

library(tidyverse)

library(tidymodels)

library(knitr)

library(Stat2Data) #contains data set

library(patchwork)

# set default theme in ggplot2

ggplot2::theme_set(ggplot2::theme_bw())Logistic Regression

Announcements

Project Presentations in lab on Friday, March 28

- Check email for presentation order and feedback assignments

Statistics experience due April 22

Questions from this week’s content?

Topics

Logistic regression for binary response variable

Use logistic regression model to calculate predicted odds and probabilities

Interpret the coefficients of a logistic regression model with

- a single categorical predictor

- a single quantitative predictor

- multiple predictors

Computational setup

Recap

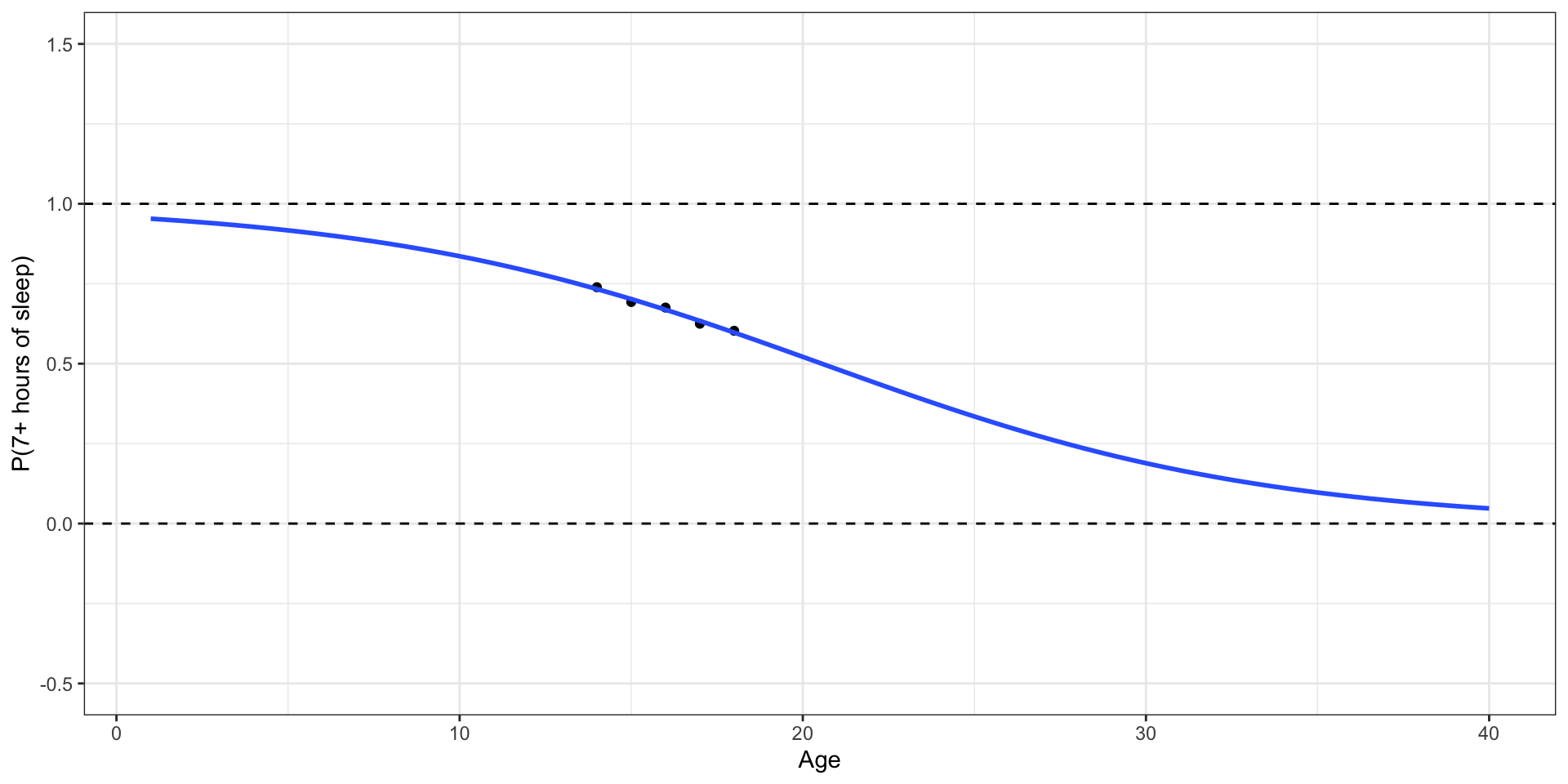

Do teenagers get 7+ hours of sleep?

Students in grades 9 - 12 surveyed about health risk behaviors including whether they usually get 7 or more hours of sleep.

Sleep7

1: yes

0: no

# A tibble: 446 × 6

Age Sleep7 Sleep SmokeLife SmokeDaily MarijuaEver

<int> <int> <fct> <fct> <fct> <int>

1 16 1 8 hours Yes Yes 1

2 17 0 5 hours Yes Yes 1

3 18 0 5 hours Yes Yes 1

4 17 1 7 hours Yes No 1

5 15 0 4 or less hours No No 0

6 17 0 6 hours No No 0

7 17 1 7 hours No No 0

8 16 1 8 hours Yes No 0

9 16 1 8 hours No No 0

10 18 0 4 or less hours Yes Yes 1

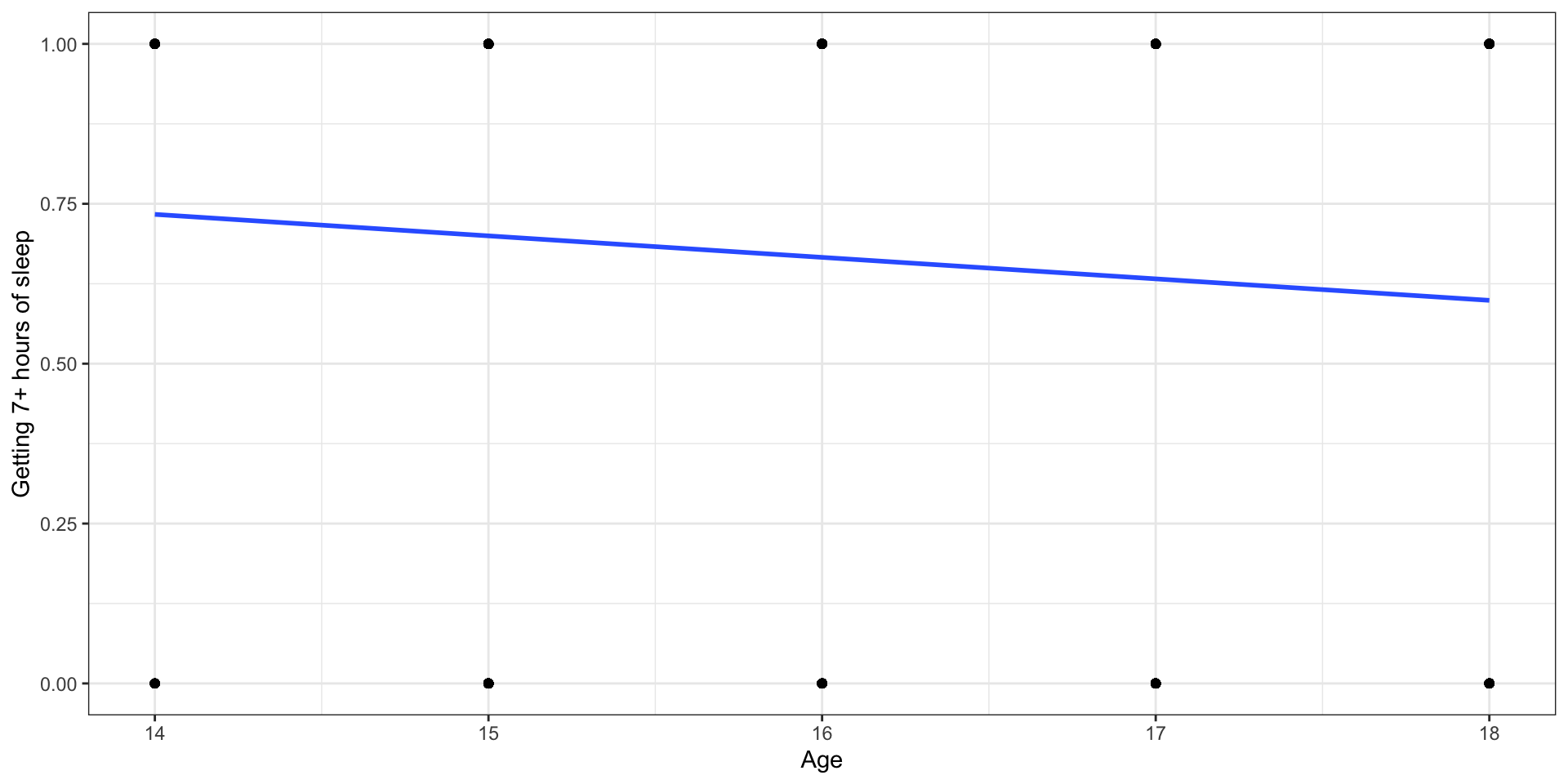

# ℹ 436 more rowsLet’s fit a linear regression model

Outcome:

Let’s use proportions

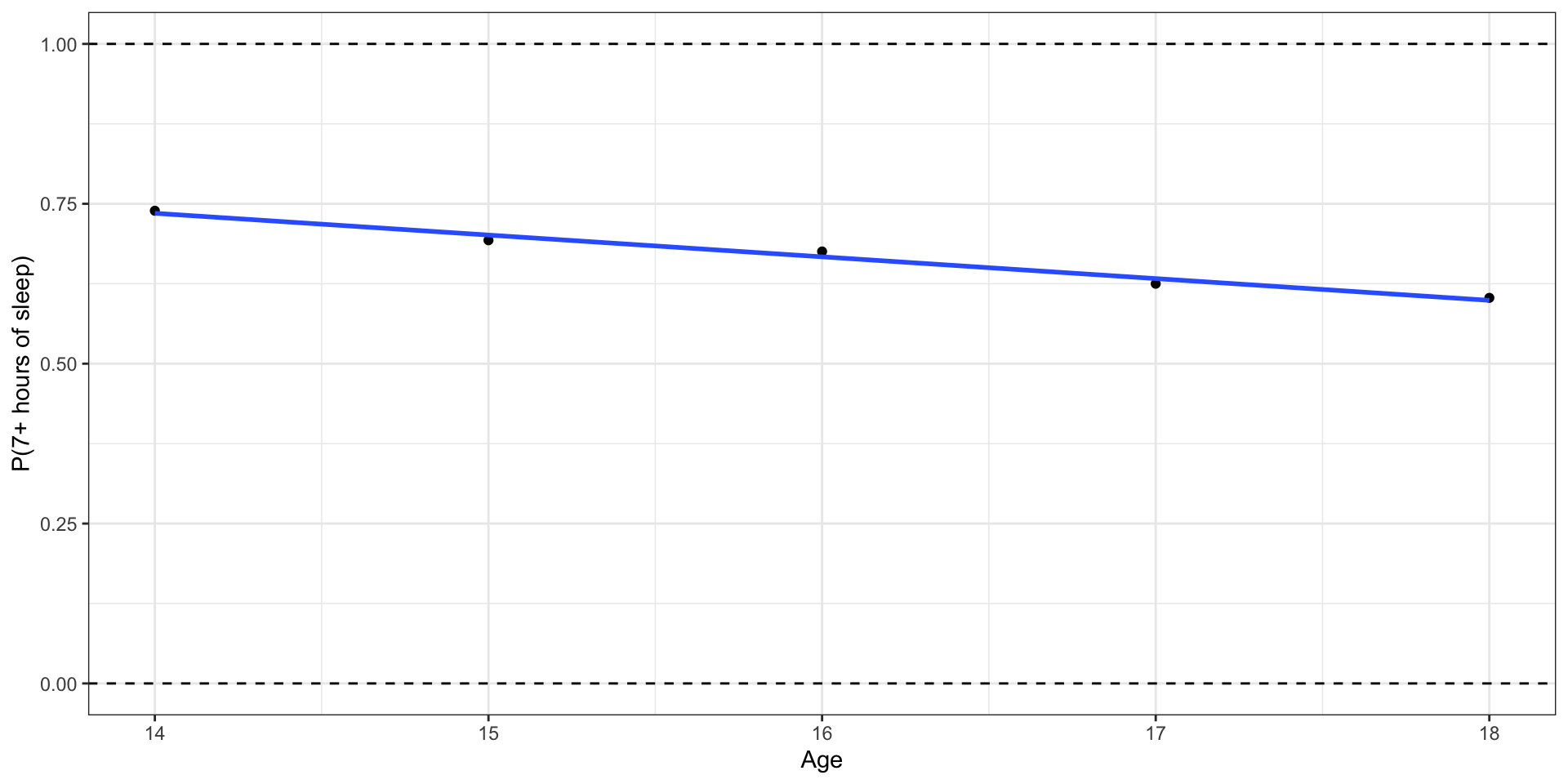

Outcome: Probability of getting 7+ hours of sleep

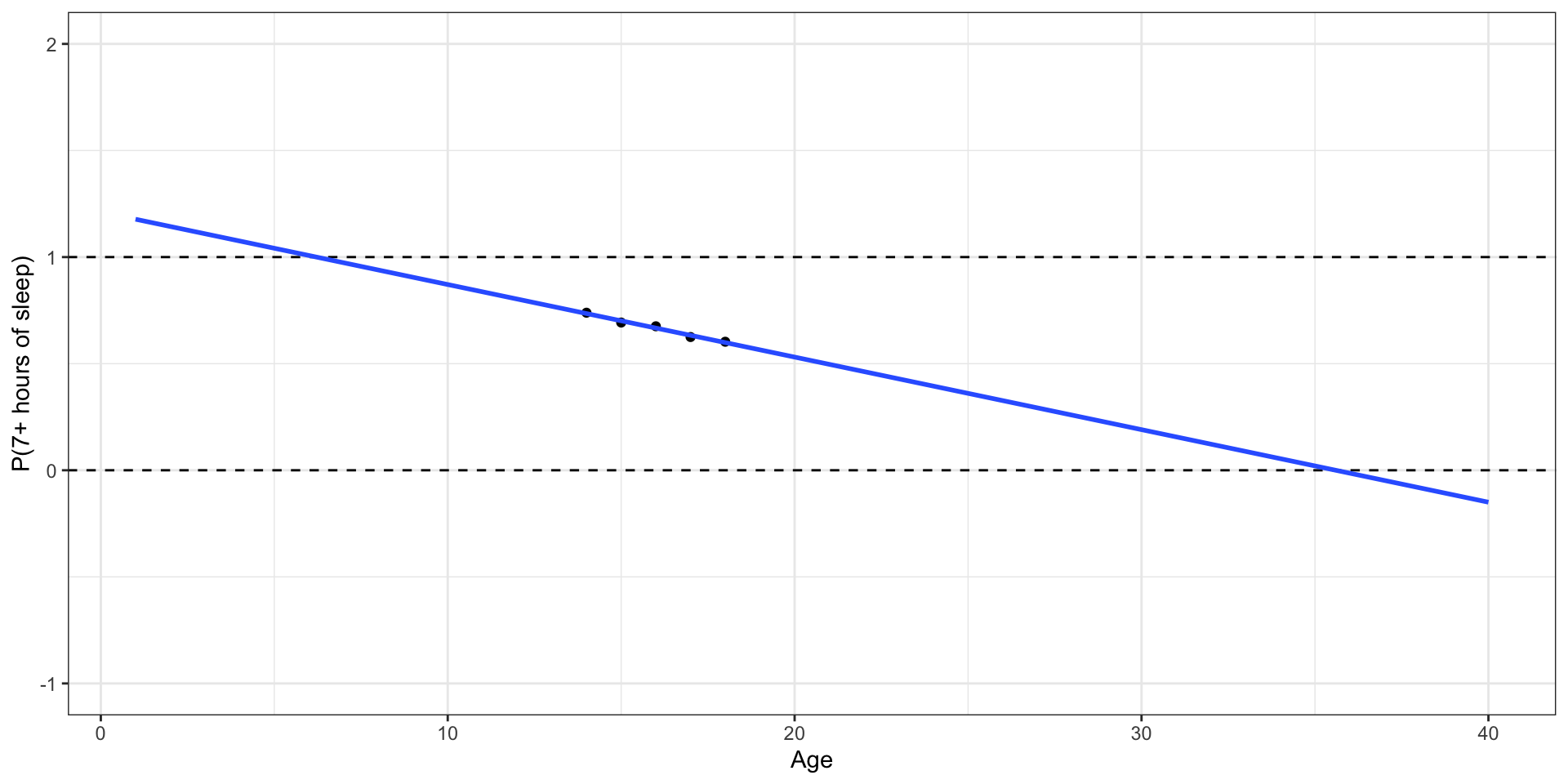

What happens if we zoom out?

Outcome: Probability of getting 7+ hours of sleep

🛑 This model produces predictions outside of 0 and 1.

Let’s try another model

✅ This model (called a logistic regression model) only produces predictions between 0 and 1.

Probabilities and odds

Binary response variable

- Go from

From odds to probabilities

- Logistic model: log odds =

- Odds =

- Combining (1) and (2) with what we saw earlier

. . .

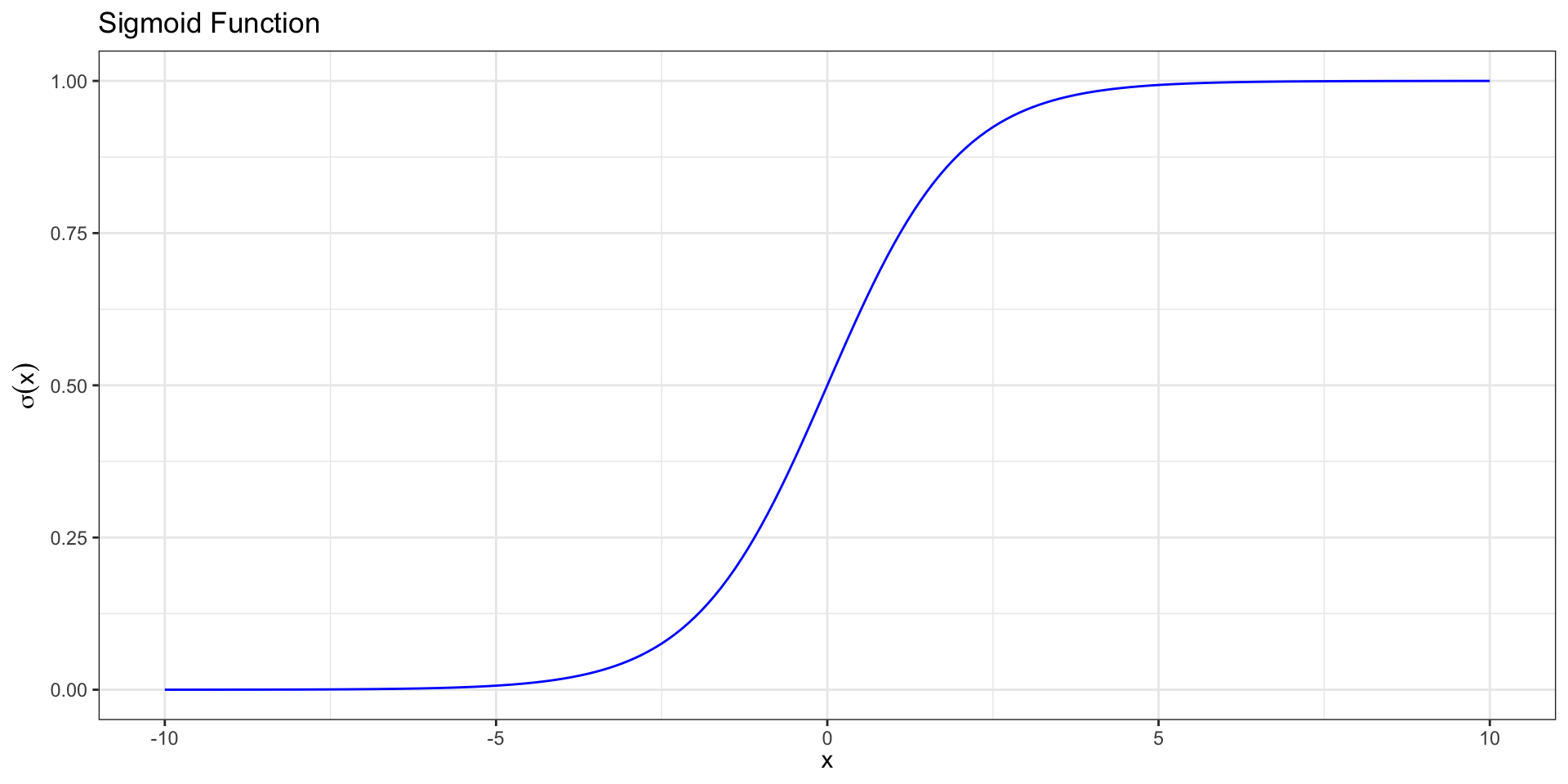

Sigmoid Function

We call this function relating the probability to the predictors a sigmoid function,

Sigmoid Function

. . .

Logistic regression

Logistic regression model

Logit form:

. . .

Probability form:

. . .

Logit and sigmoid link functions are inverses of each other.

More on link functions later, if time permits

Goal

We want to use our data to estimate

In this modeling scheme, one typically finds

Linear Regression vs. Logistic Regression

Linear regression:

Quantitative outcome

Estimate

Use

Logistic regression:

Binary outcome

Estimate

Use

Likelihood function for

. . .

. . .

Likelihood

The likelihood function for

. . .

We will use the log-likelihood function to find the MLEs

Log-likelihood

The log-likelihood function for

Log-likelihood

Plugging in

Finding the MLE

- Taking the derivative:

. . .

- If we set this to zero, there is no closed form solution.

. . .

- R uses numerical approximation to find the MLE.

Example

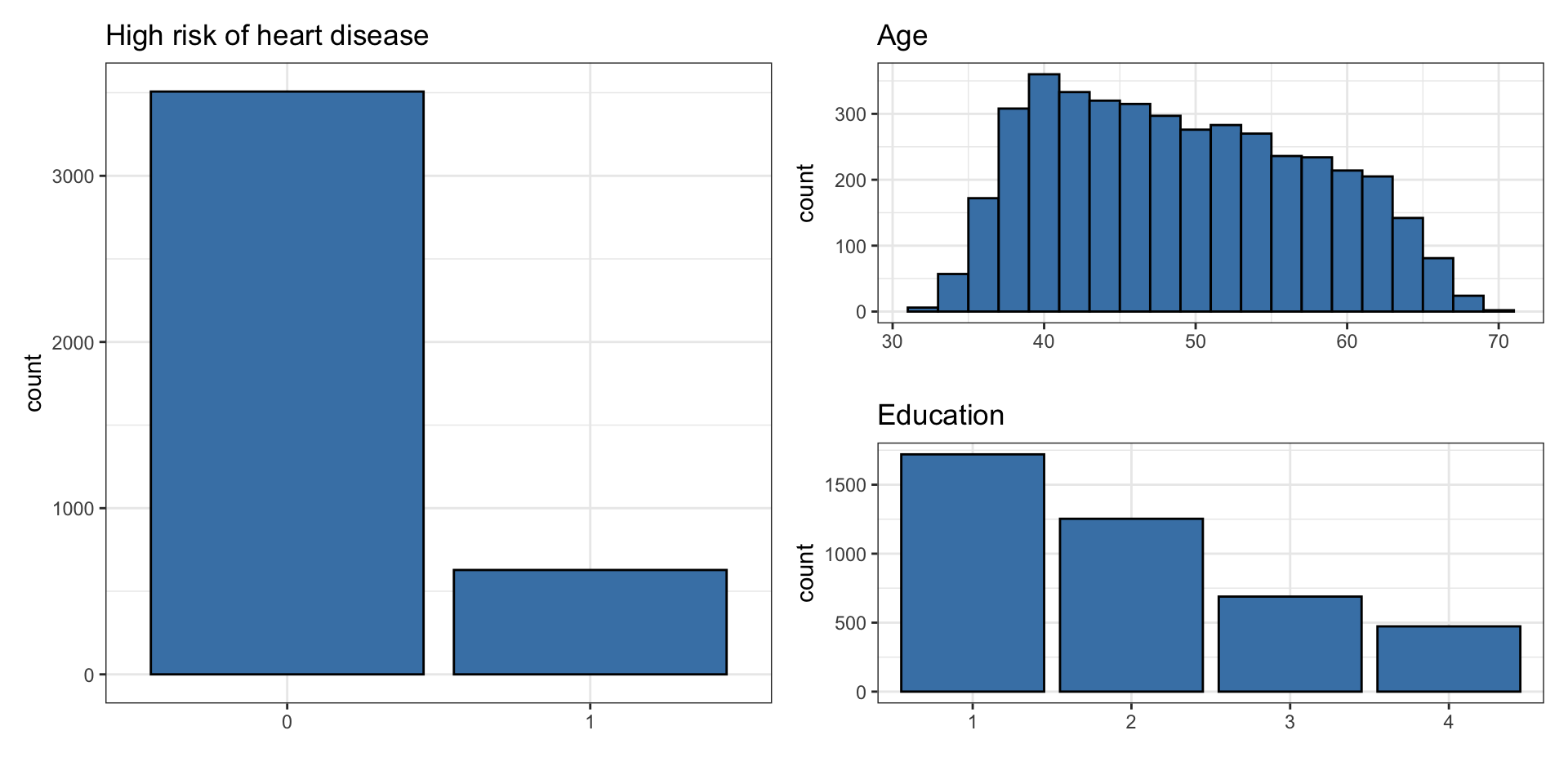

Risk of coronary heart disease

This data set is from an ongoing cardiovascular study on residents of the town of Framingham, Massachusetts. We want to examine the relationship between various health characteristics and the risk of having heart disease.

high_risk: 1 = High risk of having heart disease in next 10 years, 0 = Not high risk of having heart disease in next 10 yearsage: Age at exam time (in years)education: 1 = Some High School, 2 = High School or GED, 3 = Some College or Vocational School, 4 = College

Data: heart_disease

# A tibble: 4,135 × 3

age education high_risk

<dbl> <fct> <fct>

1 39 4 0

2 46 2 0

3 48 1 0

4 61 3 1

5 46 3 0

6 43 2 0

7 63 1 1

8 45 2 0

9 52 1 0

10 43 1 0

# ℹ 4,125 more rowsUnivariate EDA

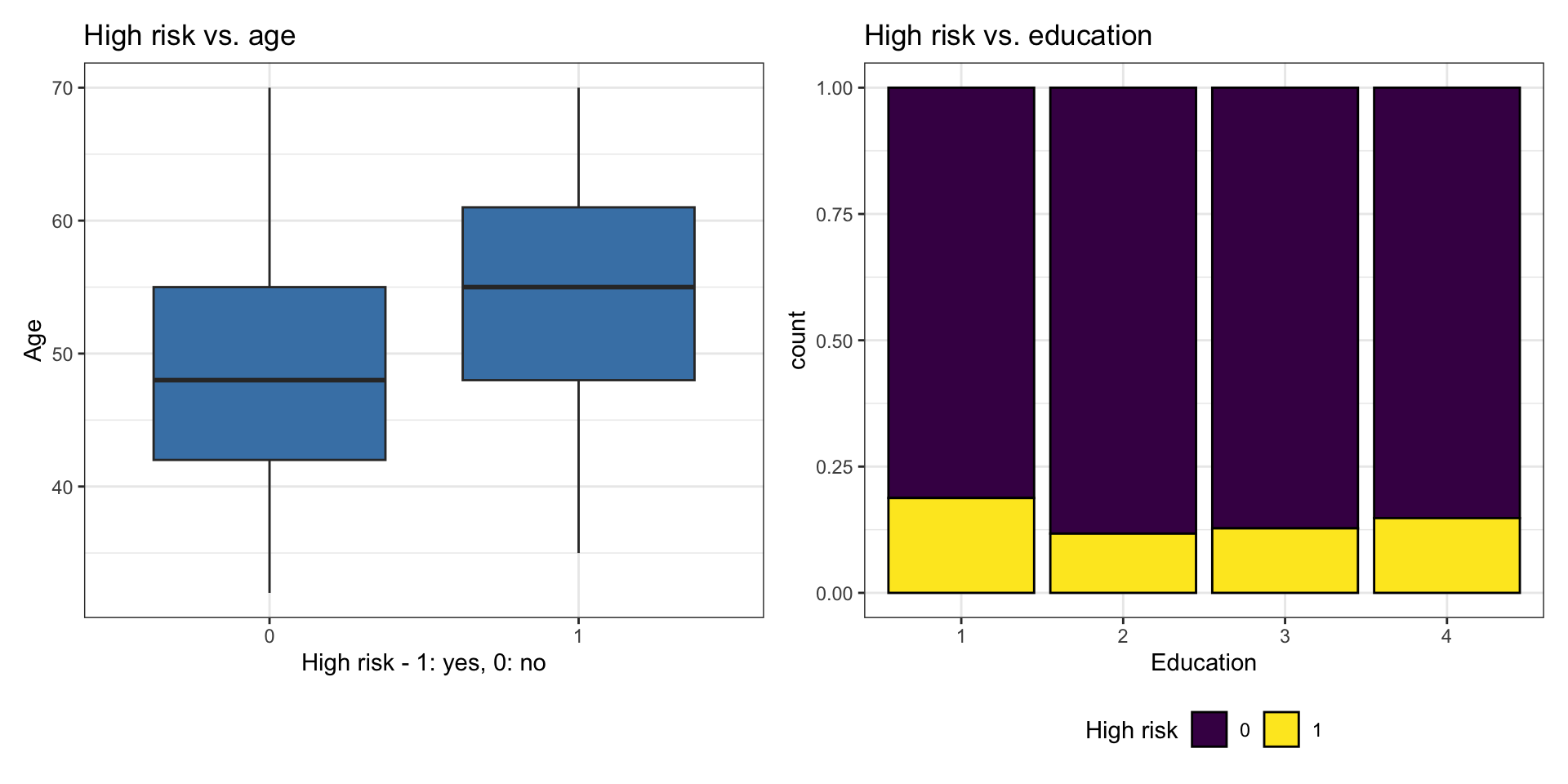

Bivariate EDA

Bivariate EDA code

{r}

#| echo: false

p1 <- ggplot(heart_disease, aes(x = high_risk, y = age)) +

geom_boxplot(fill = "steelblue") +

labs(x = "High risk - 1: yes, 0: no",

y = "Age")

p2 <- ggplot(heart_disease, aes(x = education, fill = high_risk)) +

geom_bar(position = "fill", color = "black") +

labs(x = "Education",

fill = "High risk") +

scale_fill_viridis_d() +

theme(legend.position = "bottom")

p1 + p2Let’s fit the model

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 |

| age | 0.073 | 0.005 | 13.385 | 0.000 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 |

Interpretation in terms of log-odds

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 |

| age | 0.073 | 0.005 | 13.385 | 0.000 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 |

education4: The log-odds of being high risk for heart disease are expected to be 0.020 less for those with a college degree compared to those with some high school, holding age constant.

. . .

We would not use the interpretation in terms of log-odds in practice.

Interpretation in terms of log-odds

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 |

| age | 0.073 | 0.005 | 13.385 | 0.000 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 |

age: For each additional year in age, the log-odds of being high risk for heart disease are expected to increase by 0.073, holding education level constant.

. . .

We would not use the interpretation in terms of log-odds in practice.

Interpretation in terms of odds

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 |

| age | 0.073 | 0.005 | 13.385 | 0.000 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 |

education4: The odds of being high risk for heart disease for those with a college degree are expected to be 0.98 (exp{-0.020}) times the odds for those with some high school, holding age constant.

In logistic regression with 2+ predictors,

Interpretation in terms of odds

| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -5.385 | 0.308 | -17.507 | 0.000 |

| age | 0.073 | 0.005 | 13.385 | 0.000 |

| education2 | -0.242 | 0.112 | -2.162 | 0.031 |

| education3 | -0.235 | 0.134 | -1.761 | 0.078 |

| education4 | -0.020 | 0.148 | -0.136 | 0.892 |

age: For each additional year in age, the odds being high risk for heart disease are expected to multiply by a factor of 1.08 (exp(0.073)), holding education level constant.

Alternate interpretation: For each additional year in age, the odds of being high risk for heart disease are expected to increase by 8%.

In logistic regression with 2+ predictors,

Generalized Linear Models

Introduction to GLMs

- Wider class of models.

- Response variable does not have to be continuous and/or normal.

- Variance does not have to be constant

- Still need to specify distribution of outcome variable (randomness).

- Does not require a linear relationship between response and explanatory variable. Instead, assumes linear relationship between the transformed expected response (ex.

Generalization of Linear Model

Linear model

. . .

GLM

. . .

We call

Examples of link functions

Linear regression

. . .

Logistic regression

. . .

Probit model

Prediction

Predicted log odds

heart_disease_aug =

augment(heart_edu_age_fit)

heart_disease_aug|> select(.fitted, .resid)|>

head(6)# A tibble: 6 × 2

.fitted .resid

<dbl> <dbl>

1 -2.55 -0.388

2 -2.26 -0.446

3 -1.87 -0.536

4 -1.15 1.69

5 -2.25 -0.448

6 -2.48 -0.402. . .

For observation 1

Predicted probabilities

heart_disease_aug$predicted_prob <-

predict.glm(heart_edu_age_fit, heart_disease, type = "response")

heart_disease_aug|>

select(.fitted,predicted_prob) |>

head(6)# A tibble: 6 × 2

.fitted predicted_prob

<dbl> <dbl>

1 -2.55 0.0726

2 -2.26 0.0948

3 -1.87 0.134

4 -1.15 0.240

5 -2.25 0.0954

6 -2.48 0.0775. . .

For observation 1

Predicted classes

# Convert probabilities to binary predictions (0 or 1)

heart_disease_aug <- heart_disease_aug |>

mutate(predicted_class = ifelse(predicted_prob > 0.5, 1, 0))

heart_disease_aug |>

select(predicted_prob, predicted_class) # A tibble: 4,135 × 2

predicted_prob predicted_class

<dbl> <dbl>

1 0.0726 0

2 0.0948 0

3 0.134 0

4 0.240 0

5 0.0954 0

6 0.0775 0

7 0.317 0

8 0.0887 0

9 0.172 0

10 0.0967 0

# ℹ 4,125 more rowsObserved vs. predicted

What does the following table show?

heart_disease_aug|>

count(high_risk, predicted_class)# A tibble: 2 × 3

high_risk predicted_class n

<fct> <dbl> <int>

1 0 0 3507

2 1 0 628. . .

The predicted_class is the class with the probability of occurring higher than 0.5. What is a limitation to using this method to determine the predicted class?

Recap

Reviewed the relationship between odds and probabilities

Introduced logistic regression for binary response variable

Interpreted the coefficients of a logistic regression model with multiple predictors

Introduced generalized linear model